This article first appeared on bicorner.com

In my last article I talked about Time Series Analysis using R and left off with simple exponential smoothing. Here I want to talk about more advanced smoothing methods.

Holt’s Exponential Smoothing

If you have a time series that can be described using an additive model with increasing or decreasing trend and no seasonality, you can use Holt’s exponential smoothing to make short-term forecasts.

Holt’s exponential smoothing estimates the level and slope at the current time point. Smoothing is controlled by two parameters: alpha, for the estimate of the level at the current time point, and beta, for the estimate of the slope b of the trend component at the current time point. As with simple exponential smoothing, the parameters alpha and beta have values between 0 and 1, and values that are close to 0 mean that little weight is placed on the most recent observations when making forecasts of future values.
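To make the two updates concrete, here is a minimal from-scratch sketch of Holt's recursions in R. The helper holt_smooth() is hypothetical, and its default starting values (level from the second observation, slope from the first difference, smoothing from the third observation onwards) are one common convention assumed here; HoltWinters() may initialise differently, so check ?HoltWinters before comparing numbers.

```r
# Minimal sketch of Holt's linear-trend exponential smoothing.
# holt_smooth() is a hypothetical helper, not part of base R or any package.
holt_smooth <- function(y, alpha, beta,
                        l.start = y[2], b.start = y[2] - y[1]) {
  n <- length(y)
  level <- l.start
  slope <- b.start
  fitted <- rep(NA_real_, n)  # one-step-ahead (in-sample) forecasts
  for (t in 3:n) {
    fitted[t] <- level + slope  # forecast of y[t] made at time t - 1
    new_level <- alpha * y[t] + (1 - alpha) * (level + slope)
    slope <- beta * (new_level - level) + (1 - beta) * slope
    level <- new_level
  }
  list(level = level, slope = slope, fitted = fitted,
       SSE = sum((y - fitted)^2, na.rm = TRUE))
}

# Usage: alpha = 1 copies each new observation into the level,
# and beta = 0 keeps the slope at its starting value.
res <- holt_smooth(c(1, 2, 4, 7), alpha = 1, beta = 0)
res$level  # 7
res$slope  # 1
res$SSE    # 5
```

The two extreme settings in the usage example show the roles of the parameters directly: alpha controls how far the level follows new data, beta how far the slope does.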

An example of a time series that can probably be described using an additive model with a trend and no seasonality is the time series of US Treasury bill contracts on the Chicago market for 100 consecutive trading days in 1981.

We can read in and plot the data in R by typing:

> install.packages("fma")

> library(fma)

> ustreasseries <- ustreas # US Treasury bill contracts data set from the fma package

> plot.ts(ustreasseries)

To make forecasts, we can fit a predictive model using the HoltWinters() function in R. To use HoltWinters() for Holt’s exponential smoothing, we need to set the parameter gamma=FALSE (the gamma parameter is used for Holt-Winters exponential smoothing, as described below).
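As a hedged illustration that does not need the fma data, the sketch below fits Holt's exponential smoothing with base R's HoltWinters() to a simulated trending series (simulated only so the code runs on its own) and extracts the same pieces that HoltWinters() prints: the smoothing parameters, the final coefficients and the SSE.

```r
# Holt's exponential smoothing with base R's HoltWinters():
# gamma = FALSE switches off the seasonal component.
set.seed(42)
# Simulated stand-in series: a random walk with a slight downward drift.
y <- ts(92 + cumsum(rnorm(100, mean = -0.05, sd = 0.3)))

fit <- HoltWinters(y, gamma = FALSE)

fit$alpha   # estimated level smoothing parameter
fit$beta    # estimated slope smoothing parameter
coef(fit)   # a = final level, b = final slope
fit$SSE     # in-sample sum of squared one-step-ahead errors
```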

For example, to use Holt’s exponential smoothing to fit a predictive model for US Treasury bill contracts, we type:

> ustreasseriesforecasts <- HoltWinters(ustreasseries, gamma=FALSE)

> ustreasseriesforecasts

Holt-Winters exponential smoothing with trend and without seasonal component.

Call:

HoltWinters(x = ustreasseries, gamma = FALSE)

Smoothing parameters:

alpha: 1

beta : 0.01073

gamma: FALSE

Coefficients:

[,1]

a 85.32000000

b -0.04692665

> ustreasseriesforecasts$SSE

[1] 8.707433

The estimated value of alpha is 1.0, and of beta is 0.0107. Alpha is at its maximum, so the estimate of the current level is simply the most recent observation. Beta, by contrast, is very low, so the estimate of the slope b of the trend component changes only slowly and is based on observations spread over a long stretch of the series rather than just the most recent ones. This suggests that the level of the series moves around a lot from day to day, while the underlying slope changes little. The value of the sum-of-squared-errors for the in-sample forecast errors is 8.707.
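One way to read these estimates is through the weights they imply. In exponential smoothing with parameter p, the value k steps back gets weight p(1 - p)^k; for beta, the "values" being averaged are the successive changes in the estimated level rather than the raw prices. The sketch below, with hypothetical helper names, compares alpha = 1 with beta = 0.01073.

```r
# Weight that exponential smoothing with parameter p (alpha or beta) gives to
# the value k steps in the past: p * (1 - p)^k, for k = 0, 1, ..., k_max.
smoothing_weights <- function(p, k_max) p * (1 - p)^(0:k_max)

# Share of the total weight that falls on the n_recent most recent values.
recent_share <- function(p, n_recent) sum(smoothing_weights(p, n_recent - 1))

round(smoothing_weights(1, 3), 4)  # alpha = 1: all weight on the latest value
recent_share(1, 10)                # 1
recent_share(0.01073, 10)          # about 0.10 for beta = 0.01073
```

With alpha = 1 all the weight sits on the latest observation, whereas with beta = 0.01073 only about a tenth of the weight falls on the ten most recent level changes.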

We can plot the original time series as a black line, with the forecasted values as a red line on top of that, by typing:

> plot(ustreasseriesforecasts)

We can see from the picture that the in-sample forecasts agree pretty well with the observed values, although they tend to lag behind the observed values a little bit.

If you wish, you can specify the initial values of the level and the slope b of the trend component by using the “l.start” and “b.start” arguments of the HoltWinters() function. It is common to set the initial value of the level to the first value in the time series (92 for the Chicago market data), and the initial value of the slope to the second value minus the first value (0.1 for the Chicago market data). For example, to fit a predictive model to the Chicago market data using Holt’s exponential smoothing, with initial values of 92 for the level and 0.1 for the slope b of the trend component, we type:
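The rule of thumb for starting values is easy to script. This sketch uses a simulated stand-in series (not the Chicago market data), so the numbers it prints are illustrative only. It fits the model once with HoltWinters()'s default starting values and once with l.start and b.start set by the rule above.

```r
set.seed(42)
y <- ts(92 + cumsum(rnorm(100, mean = -0.05, sd = 0.3)))  # simulated stand-in

l0 <- y[1]          # initial level: first observation
b0 <- y[2] - y[1]   # initial slope: second minus first observation

fit_default <- HoltWinters(y, gamma = FALSE)
fit_custom  <- HoltWinters(y, gamma = FALSE, l.start = l0, b.start = b0)

# The starting values can shift the estimated parameters and the SSE.
rbind(default = c(fit_default$alpha, fit_default$beta, SSE = fit_default$SSE),
      custom  = c(fit_custom$alpha,  fit_custom$beta,  SSE = fit_custom$SSE))
```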

> HoltWinters(ustreasseries, gamma=FALSE, l.start=92, b.start=0.1)

Holt-Winters exponential smoothing with trend and without seasonal component.

Call:

HoltWinters(x = ustreasseries, gamma = FALSE, l.start = 92, b.start = 0.1)

Smoothing parameters:

alpha: 1

beta : 0.04529601

gamma: FALSE

Coefficients:

[,1]

a 85.32000000

b -0.08157293


As for simple exponential smoothing, we can make forecasts for future times not covered by the original time series by using the forecast() function in the “forecast” package, which calls its forecast.HoltWinters() method for models fitted with HoltWinters(). For example, our time series data for the Chicago market covers days 1 to 100, so we can make predictions for days 101-120 (20 more data points), and plot them, by typing:

> library(forecast)

> ustreasseriesforecasts2 <- forecast(ustreasseriesforecasts, h=20)

> plot(ustreasseriesforecasts2)

The forecasts are shown as a blue line, with the 80% prediction intervals as a gray shaded area, and the 95% prediction intervals as a light gray shaded area.
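For Holt's method the point forecasts are a straight line: h steps past the end of the series, the forecast is a + h*b, where a and b are the final level and slope coefficients. A minimal sketch (helper name hypothetical) applies this to the coefficients printed earlier for the Chicago market model, then checks the formula against base R's predict() on a simulated series. It reproduces point forecasts only, not the prediction intervals that forecast() adds.

```r
# Holt point forecasts: final level a plus h times final slope b.
holt_point_forecast <- function(a, b, h) a + b * seq_len(h)

# Coefficients as printed for the Chicago market model in the text.
fc <- holt_point_forecast(a = 85.32, b = -0.04692665, h = 20)
round(fc[c(1, 20)], 4)  # 85.2731 84.3815 (days 101 and 120)

# The same formula reproduces base R's predict() for a Holt fit.
set.seed(1)
y <- ts(92 + cumsum(rnorm(100, mean = -0.05, sd = 0.3)))  # simulated stand-in
fit <- HoltWinters(y, gamma = FALSE)
all.equal(as.numeric(predict(fit, n.ahead = 20)),
          holt_point_forecast(coef(fit)[["a"]], coef(fit)[["b"]], 20))
```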

As for simple exponential smoothing, we can check whether the predictive model could be improved upon by checking whether the in-sample forecast errors show non-zero autocorrelations at lags 1-20. For example, for the Chicago market data, we can make a correlogram, and carry out the Ljung-Box test, by typing:

> acf(ustreasseriesforecasts2$residuals, lag.max=20, na.action=na.pass) # na.pass lets acf() skip missing (NA) residuals

> Box.test(ustreasseriesforecasts2$residuals, lag=30, type="Ljung-Box")

Box-Ljung test

data: ustreasseriesforecasts2$residuals

X-squared = 30.4227, df = 30, p-value = 0.4442

Here the correlogram shows that the sample autocorrelation for the in-sample forecast errors at lag 15 exceeds the significance bounds. However, we would expect one in 20 of the autocorrelations for the first twenty lags to exceed the 95% significance bounds by chance alone. Indeed, when we carry out the Ljung-Box test (which here uses lags 1-30), the p-value is 0.4442, indicating that there is little evidence of non-zero autocorrelations in the in-sample forecast errors at lags 1-30.
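The Ljung-Box statistic itself is simple: Q = n(n + 2) * sum of r_k^2 / (n - k) over lags k = 1, ..., h, compared with a chi-squared distribution on h degrees of freedom. The sketch below (hypothetical helper name, simulated white-noise errors standing in for real forecast errors) computes it by hand, checks it against Box.test(), and counts how many of the first 20 sample autocorrelations cross the 95% bounds, where about one is expected by chance.

```r
# Ljung-Box statistic computed by hand:
# Q = n (n + 2) * sum_{k=1}^{h} r_k^2 / (n - k), compared with chi-squared(h).
ljung_box <- function(x, lag) {
  x <- x[!is.na(x)]  # drop missing errors (e.g. at the start of a fit)
  n <- length(x)
  r <- acf(x, lag.max = lag, plot = FALSE)$acf[-1]  # drop lag 0
  q <- n * (n + 2) * sum(r^2 / (n - seq_len(lag)))
  c(statistic = q, p.value = pchisq(q, df = lag, lower.tail = FALSE))
}

set.seed(123)
e <- rnorm(100)  # simulated white noise standing in for forecast errors
ljung_box(e, lag = 30)
Box.test(e, lag = 30, type = "Ljung-Box")  # should agree with the line above

# With 20 lags and 95% bounds, about 20 * 0.05 = 1 exceedance is expected by chance.
bound <- qnorm(0.975) / sqrt(length(e))
sum(abs(acf(e, lag.max = 20, plot = FALSE)$acf[-1]) > bound)
```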

As for simple exponential smoothing, we should also check that the forecast errors have constant variance over time, and are normally distributed with mean zero. We can do this by making a time plot of forecast errors, and a histogram of the distribution of forecast errors with an overlaid normal curve:

> plot.ts(ustreasseriesforecasts2$residuals) # make a time plot

> plotForecastErrors(ustreasseriesforecasts2$residuals) # make a histogram; plotForecastErrors() is a helper function, not part of base R or the forecast package

The time plot of forecast errors shows that the forecast errors have roughly constant variance over time. The histogram of forecast errors shows that it is plausible that the forecast errors are normally distributed with mean zero.
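The plotForecastErrors() helper is not defined in this article and is not part of base R or the forecast package. Below is a minimal hypothetical version in base R, assuming it draws what the text describes: a histogram of the forecast errors on a density scale, with a normal curve of mean zero and the errors' standard deviation overlaid. The name plot_forecast_errors() is illustrative only.

```r
# Hypothetical stand-in for a plotForecastErrors()-style helper:
# histogram of the errors (density scale) with a normal curve overlaid.
plot_forecast_errors <- function(forecasterrors) {
  forecasterrors <- forecasterrors[!is.na(forecasterrors)]  # drop missing errors
  mysd <- sd(forecasterrors)
  h <- hist(forecasterrors, plot = FALSE)
  xs <- seq(min(h$breaks), max(h$breaks), length.out = 200)
  curve_y <- dnorm(xs, mean = 0, sd = mysd)  # normal curve, mean 0
  plot(h, freq = FALSE, col = "red", ylim = c(0, max(h$density, curve_y)),
       main = "Forecast errors", xlab = "Forecast error")
  lines(xs, curve_y, col = "blue", lwd = 2)
  invisible(c(mean = mean(forecasterrors), sd = mysd))
}

# Usage with simulated errors (not the Chicago market residuals):
set.seed(7)
err_summary <- plot_forecast_errors(c(NA, NA, rnorm(98, sd = 0.3)))
err_summary
```

Returning the mean and standard deviation invisibly makes it easy to confirm numerically that the errors are centred near zero, alongside the visual check.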

Thus, the Ljung-Box test shows that there is little evidence of autocorrelations in the forecast errors, while the time plot and histogram of forecast errors show that it is plausible that the forecast errors are normally distributed with mean zero and constant variance. Therefore, we can conclude that Holt’s exponential smoothing provides an adequate predictive model for the US Treasury bill contracts on the Chicago market, which probably cannot be improved upon. In addition, it means that the assumptions that the 80% and 95% prediction intervals were based upon are probably valid.

Holt-Winters Exponential Smoothing

If you have a time series that can be described using an additive model with increasing or decreasing trend and seasonality, you can use Holt-Winters exponential smoothing to make short-term forecasts.

Holt-Winters exponential smoothing estimates the level, slope and seasonal component at the current time point. Smoothing is controlled by three parameters: alpha, beta, and gamma, for the estimates of the level, slope b of the trend component, and the seasonal component, respectively, at the current time point. The parameters alpha, beta and gamma all have values between 0 and 1, and values that are close to 0 mean that relatively little weight is placed on the most recent observations when making forecasts of future values.
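The three updates can be sketched in a few lines of R. This is a minimal illustration of the standard additive recursions (see ?HoltWinters for the exact form R uses). The helper hw_additive() is hypothetical, the seasonal terms are kept in a queue of length m ordered from the next period onward, and the caller must supply the starting values.

```r
# Minimal sketch of additive Holt-Winters updating (level, slope, season).
# hw_additive() is a hypothetical helper; starting values come from the caller.
hw_additive <- function(y, alpha, beta, gamma, level, slope, season) {
  errors <- numeric(length(y))
  for (t in seq_along(y)) {
    s_old <- season[1]                 # seasonal term estimated one cycle ago
    forecast <- level + slope + s_old  # one-step-ahead forecast of y[t]
    errors[t] <- y[t] - forecast
    new_level <- alpha * (y[t] - s_old) + (1 - alpha) * (level + slope)
    slope <- beta * (new_level - level) + (1 - beta) * slope
    # Updated seasonal term goes to the back of the queue for reuse in m steps.
    season <- c(season[-1], gamma * (y[t] - new_level) + (1 - gamma) * s_old)
    level <- new_level
  }
  list(level = level, slope = slope, season = season, errors = errors)
}

# Usage: an exact trend-plus-season series (period m = 4) is tracked with zero error.
s0 <- c(1, -1, 2, -2)
y <- 10 + (1:8) + rep(s0, 2)
res <- hw_additive(y, alpha = 0.5, beta = 0.1, gamma = 0.2,
                   level = 10, slope = 1, season = s0)
max(abs(res$errors))  # essentially 0
```

Because the test series follows the model exactly, every parameter setting leaves the states unchanged; on real data the three parameters decide how quickly each state reacts to surprises.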

An example of a time series that can probably be described using an additive model with a trend and seasonality is the time series of US births data we have already used (discussed in my previous article, “What is Time Series Analysis?”). To fit a Holt-Winters model to it, we type:

> birthtimeseriesforecasts <- HoltWinters(birthtimeseries)

> birthtimeseriesforecasts

Holt-Winters exponential smoothing with trend and additive seasonal component.

Call:

HoltWinters(x = birthtimeseries)

Smoothing parameters:

alpha: 0.5388189

beta : 0.005806264

gamma: 0.1674998

Coefficients:

[,1]

a 279.37339259

b -0.07077833

s1 -24.22000167

s2 -1.13886091

s3 -19.75018141

s4 -10.15418869

s5 -12.58375567

s6 11.96501002

s7 20.74652186

s8 18.21566037

s9 12.93338079

s10 -4.86769013

s11 4.49894836

s12 -4.59412722

> birthtimeseriesforecasts$SSE

[1] 19571.77

The estimated values of alpha, beta and gamma are 0.5388, 0.0058, and 0.1675, respectively. The value of alpha (0.5388) is moderate, indicating that the estimate of the level at the current time point is based upon both recent observations and some observations in the more distant past. The value of beta (0.0058) is close to zero, indicating that the estimate of the slope b of the trend component is barely updated over the time series and stays close to its initial value. This makes good intuitive sense, as the level changes quite a bit over the time series, but the slope b of the trend component remains roughly the same. The value of gamma (0.1675) is also low, indicating that the estimate of the seasonal component at the current time point is based upon observations from many past seasons, not just the most recent ones.
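A rough way to quantify "recent" versus "distant past" is the half-life of each parameter: the number of updates after which an observation's weight has halved, log(0.5)/log(1 - p). The sketch below (helper name hypothetical) applies it to the three estimates printed above, keeping in mind that each month's seasonal term is only updated once a year.

```r
# Half-life of an exponential smoothing parameter p: the number of updates
# after which an observation's weight has halved, log(0.5) / log(1 - p).
half_life <- function(p) log(0.5) / log(1 - p)

# Estimates printed for the births model in the text.
params <- c(alpha = 0.5388189, beta = 0.005806264, gamma = 0.1674998)

# Level and slope are updated every month, so these are in months.
round(half_life(params[c("alpha", "beta")]), 2)
# Each month's seasonal term is updated once a year, so this is in years.
round(half_life(params[["gamma"]]), 2)
```

This gives roughly 0.9 months for alpha, about 119 months (around ten years) for beta, and about 3.8 years for gamma.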

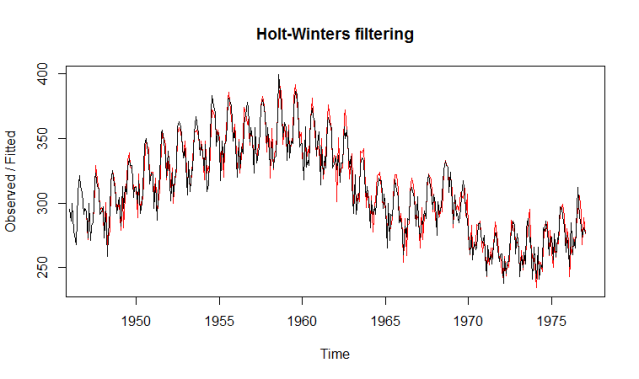

As for simple exponential smoothing and Holt’s exponential smoothing, we can plot the original time series as a black line, with the forecasted values as a red line on top of that:

> plot(birthtimeseriesforecasts)

We see from the plot that the Holt-Winters exponential smoothing method is very successful in predicting the seasonal peaks, which occur roughly in November every year.

To make forecasts for future times not included in the original time series, we use the forecast() function in the “forecast” package, which calls its forecast.HoltWinters() method for models fitted with HoltWinters(). For example, the original series of monthly live births (adjusted) in thousands for the United States runs from January 1946 to January 1977. If we wanted to make forecasts for February 1977 to January 1981 (48 more months), and plot the forecasts, we would type:

> birthtimeseriesforecasts2 <- forecast(birthtimeseriesforecasts, h=48)

> plot(birthtimeseriesforecasts2)

The forecasts are shown as a blue line, and the gray and light gray shaded areas show 80% and 95% prediction intervals, respectively.
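The point forecasts behind the blue line follow a simple formula: h months ahead, the forecast is a + h*b plus the seasonal coefficient for that month, with s1, ..., s12 recycled every 12 months. The sketch below (helper name hypothetical) applies it to the coefficients printed above for the births model and cross-checks the formula against base R's predict() on the built-in co2 series. It covers point forecasts only, not the prediction intervals.

```r
# Additive Holt-Winters point forecast h steps ahead:
# a + h * b + s_j, where s_j cycles through s1, ..., s12.
hw_point_forecast <- function(a, b, s, h) {
  steps <- seq_len(h)
  a + b * steps + s[(steps - 1) %% length(s) + 1]
}

# Coefficients printed for the births model in the text.
a <- 279.37339259
b <- -0.07077833
s <- c(-24.22000167, -1.13886091, -19.75018141, -10.15418869, -12.58375567,
       11.96501002, 20.74652186, 18.21566037, 12.93338079, -4.86769013,
       4.49894836, -4.59412722)
fc <- hw_point_forecast(a, b, s, h = 48)
head(fc, 3)

# Cross-check against base R's predict() on the built-in co2 series.
fit <- HoltWinters(co2)
cf <- as.numeric(coef(fit))  # a, b, s1, ..., s12
all.equal(as.numeric(predict(fit, n.ahead = 24)),
          hw_point_forecast(cf[1], cf[2], cf[-(1:2)], h = 24))
```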

We can investigate whether the predictive model can be improved upon by checking whether the in-sample forecast errors show non-zero autocorrelations at lags 1-30, by making a correlogram and carrying out the Ljung-Box test:

> acf(birthtimeseriesforecasts2$residuals, lag.max=30, na.action=na.pass) # na.pass lets acf() skip missing (NA) residuals

> Box.test(birthtimeseriesforecasts2$residuals, lag=30, type="Ljung-Box")

Box-Ljung test

data: birthtimeseriesforecasts2$residuals

X-squared = 81.1214, df = 30, p-value = 1.361e-06

The correlogram shows that the autocorrelations for the in-sample forecast errors do not exceed the significance bounds for lags 1-20. Furthermore, the p-value for Ljung-Box test is 0.6, indicating that there is little evidence of non-zero autocorrelations at lags 1-20.

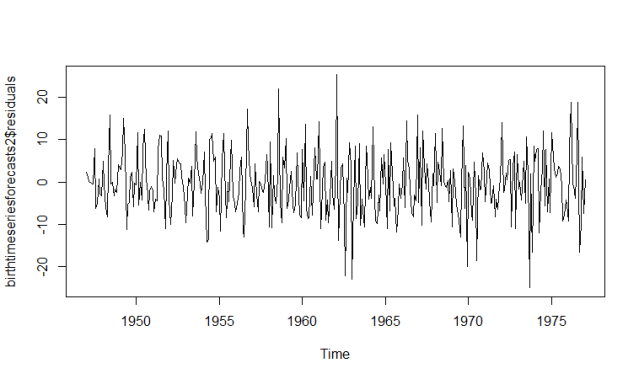

We can check whether the forecast errors have constant variance over time, and are normally distributed with mean zero, by making a time plot of the forecast errors and a histogram (with overlaid normal curve):

> plot.ts(birthtimeseriesforecasts2$residuals) # make a time plot

> plotForecastErrors(birthtimeseriesforecasts2$residuals) # make a histogram

From the time plot, it appears plausible that the forecast errors have constant variance over time. From the histogram of forecast errors, it seems plausible that the forecast errors are normally distributed with mean zero.

Thus, there is little evidence of autocorrelation at lags 1-20 for the forecast errors, and the forecast errors appear to be normally distributed with mean zero and constant variance over time. This suggests that Holt-Winters exponential smoothing provides an adequate predictive model of the monthly live births (adjusted) in thousands for the United States, 1946-1979, which probably cannot be improved upon. Furthermore, the assumptions upon which the prediction intervals were based are probably valid.

Authored by: Jeffrey Strickland, Ph.D.

Jeffrey Strickland, Ph.D., is the author of “Predictive Analytics Using R” and a Senior Analytics Scientist with Clarity Solution Group. He has performed predictive modeling, simulation and analysis for the Department of Defense, NASA, the Missile Defense Agency, and the financial and insurance industries for over 20 years. Jeff is a Certified Modeling and Simulation Professional (CMSP) and an Associate Systems Engineering Professional. He has published nearly 200 blog posts on LinkedIn, is a frequently invited guest speaker, and is the author of 20 books, including:

- Operations Research using Open-Source Tools

- Discrete Event simulation using ExtendSim

- Crime Analysis and Mapping

- Missile Flight Simulation

- Mathematical Modeling of Warfare and Combat Phenomenon

- Predictive Modeling and Analytics

- Using Math to Defeat the Enemy

- Verification and Validation for Modeling and Simulation

- Simulation Conceptual Modeling

- System Engineering Process and Practices

- Weird Scientist: the Creators of Quantum Physics

- Albert Einstein: No one expected me to lay a golden eggs

- The Men of Manhattan: the Creators of the Nuclear Era

- Fundamentals of Combat Modeling

- LinkedIn Memoirs

- Quantum Phaith

- Dear Mister President

- Handbook of Handguns

- Knights of the Cross: The True Story of the Knights Templar
